Good morning, fellow AI enthusiast! This week's iteration focuses on active learning, probably the future of training your AI models. While large-scale models like ChatGPT and vision models have captured our attention, their secret lies not only in the volume of data they consume but also in its quality.

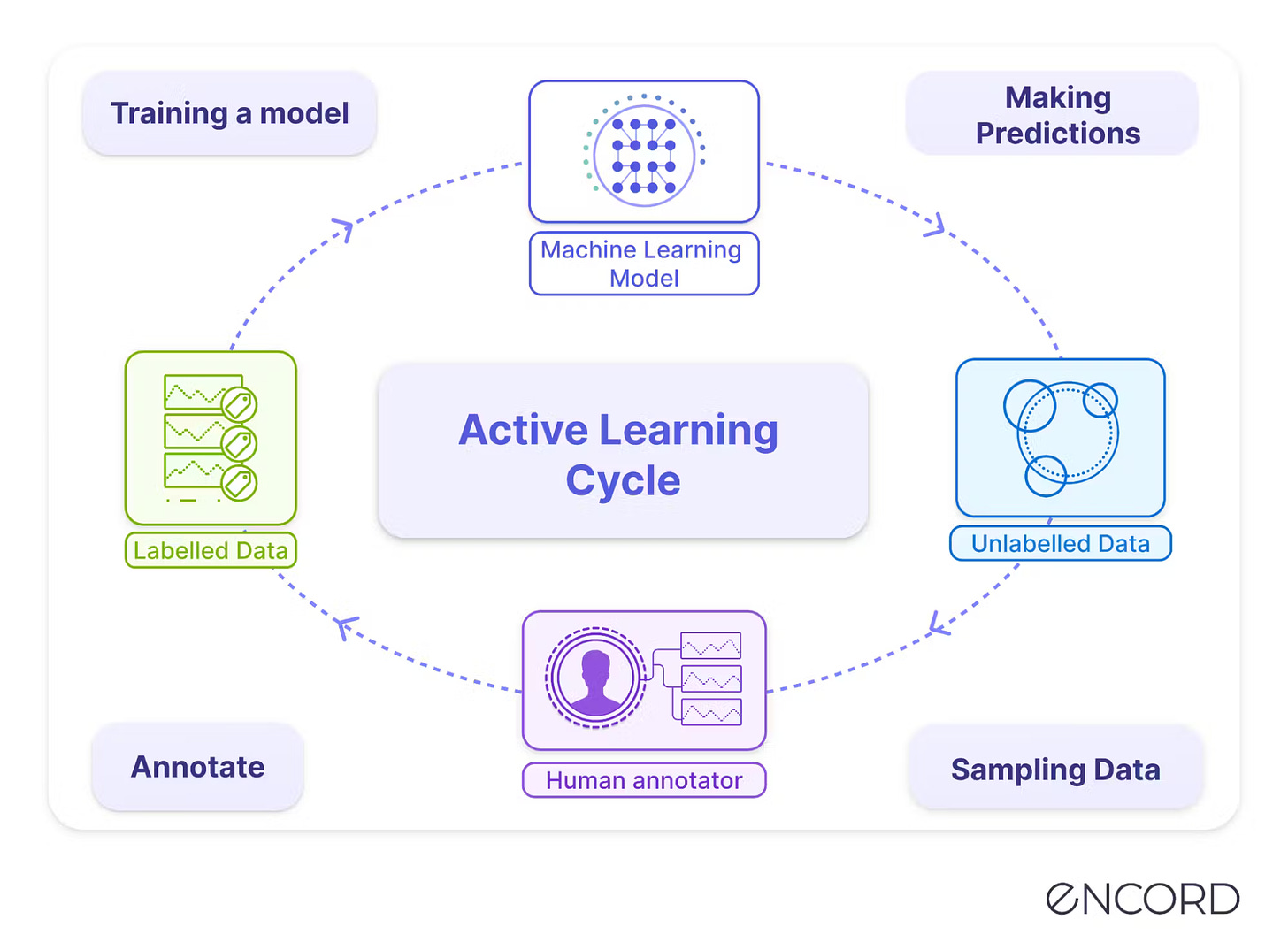

Active learning lets you optimize dataset annotation and train the best possible model with the least amount of training data. It is a supervised learning approach: you start with a small, curated batch of annotated data, train your model on it, then use that model to label unseen data. By evaluating how accurate those predictions are, with the help of acquisition functions and query strategies, you select the most informative data to annotate next, which improves the model's performance.
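To make the idea of an acquisition function a bit more concrete, here is a minimal sketch of one common choice, entropy-based uncertainty sampling. It is only an illustration under assumptions: the sample ids, the probabilities, and the helper names `entropy` and `select_for_annotation` are all made up for this example and are not taken from any particular tool.

```python
import math


def entropy(probs):
    """Shannon entropy of one predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` most uncertain samples.

    `predictions` maps a sample id to the model's predicted class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda sample_id: entropy(predictions[sample_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled images (made-up numbers).
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # model is very sure
    "img_002": [0.40, 0.35, 0.25],  # model is hesitating
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # close to a coin toss
}

# With an annotation budget of two images, the two most uncertain ones are picked.
to_annotate = select_for_annotation(predictions, budget=2)
print(to_annotate)
```

Entropy is just one possible scoring rule. Margin-based or least-confidence scores would slot into the same `key` function, and which one works best depends on your data.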

This iterative process saves time and resources: you annotate fewer images and still end up with an optimized model. Active learning lets you analyze your model's prediction confidence, requesting additional annotations where confidence is low and skipping further annotation where the model is already confident.
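The confidence check described above can be sketched in a few lines. This is a simplified illustration, not how any specific platform does it: the `split_by_confidence` helper, the 0.9 threshold, and the example probabilities are all assumptions chosen for readability.

```python
def split_by_confidence(predictions, threshold=0.9):
    """Split sample ids into (needs_annotation, confident).

    A sample counts as confident when its top class probability reaches
    `threshold`. The 0.9 default is an arbitrary, illustrative value.
    """
    needs_annotation, confident = [], []
    for sample_id, probs in predictions.items():
        if max(probs) >= threshold:
            confident.append(sample_id)
        else:
            needs_annotation.append(sample_id)
    return needs_annotation, confident


# Hypothetical predictions on unlabeled images (made-up numbers).
unlabeled = {
    "img_101": [0.95, 0.05],
    "img_102": [0.55, 0.45],
    "img_103": [0.08, 0.92],
    "img_104": [0.70, 0.30],
}

needs_annotation, confident = split_by_confidence(unlabeled)
print("send to annotators:", needs_annotation)
print("no extra labels needed for now:", confident)
```

In a full loop you would annotate the low-confidence samples, retrain, and run the split again until the model is good enough or the annotation budget runs out.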

Learn more about active learning and witness its practical application through an innovative tool developed by my friends at Encord in the article or video:

We are incredibly grateful that the newsletter is now read by over 12,000 incredible human beings, counting our email list and LinkedIn subscribers. Reach out to contact@louisbouchard.ai with any questions or for details on sponsorships. Follow our newsletter at Towards AI, where we share the most exciting news, learning resources, articles, and memes from our Discord community every week.

If you need more content to get you through your week, check out the podcast!

Thank you for reading, and we wish you a fantastic week! Be sure to get enough rest and sleep!

Louis