The New AI Rivalries

A study finds developers 19% slower with AI, while xAI and Qwen battle over scale and multimodality.

Happy Friday, everyone! No plans yet for tonight or this weekend? I've got you... well, for five minutes!

Here’s a recap of all the news I covered this week in the daily short videos I now post on YouTube (and every other social platform that supports short-form video).

You can watch them directly on YouTube, Instagram, and TikTok almost every day, or keep reading for the quick weekly recap.

TL;DR: My goal with these videos is to cover what’s new and why it’s relevant, with a side quest of figuring out whether it’s just hype (for this, I need your help: tell me in the comments or replies whether you agree!).

Here’s what happened this week, and why it matters (or does not):

1️⃣ AI coding feels magical… until you time it

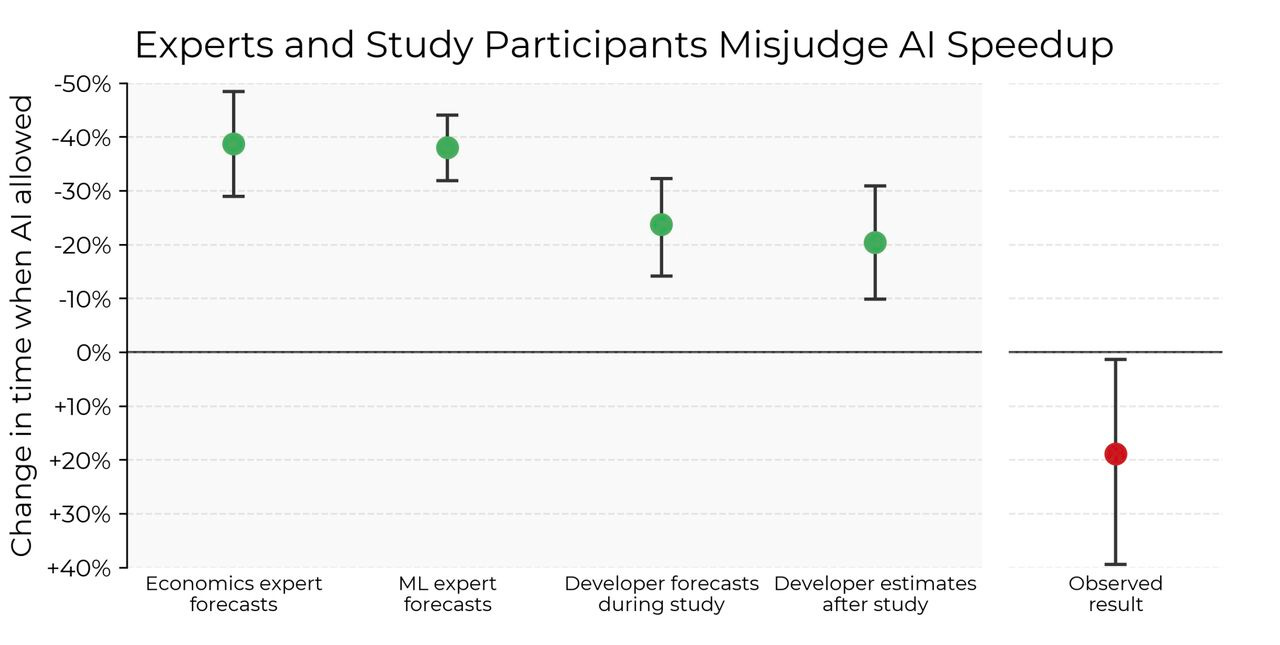

A new controlled study showed that experienced open-source developers were actually 19% slower when using AI tools like Cursor Pro and Claude 3.5/3.7. Even though the tools felt faster, they added friction in complex codebases: mismatched suggestions, context juggling, and cognitive overhead.

Key details (caveats): Only 16 developers were studied, all highly experienced (5+ years in the same repository). It’s a small sample, but still a notable result!

Why it matters (or doesn’t): The signal is strong enough to question the “AI = instant productivity” hype. Test these tools in your own workflow before assuming speed gains.

2️⃣ xAI’s Grok 4 Fast: 2M tokens + cheaper pricing

Grok 4 Fast just launched with a 2,000,000-token context window, claims of up to 2.5x GPT-5 API speed, and lower “thinking token” usage. It’s multimodal, optimized for tool use, and aggressively priced: $0.20 per 1M input tokens and $0.50 per 1M output tokens.

Key details: xAI says this makes it ~23–25x cheaper than competitors and ~47x cheaper than Grok 4 due to shorter reasoning. Benchmarks show strong text performance.
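
To make the per-million-token pricing concrete, here is a minimal sketch that estimates the cost of a single Grok 4 Fast request from the prices quoted above. The token counts are hypothetical, and the sketch assumes purely linear per-token billing, ignoring any tiered, long-context, or cached-input pricing xAI may apply.

```python
# Grok 4 Fast prices quoted in this recap, in USD per 1M tokens.
GROK_INPUT_PRICE_PER_M = 0.20
GROK_OUTPUT_PRICE_PER_M = 0.50


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request.

    Assumption: billing is strictly linear in tokens (no tiers, no caching discounts).
    """
    return (
        input_tokens * GROK_INPUT_PRICE_PER_M
        + output_tokens * GROK_OUTPUT_PRICE_PER_M
    ) / 1_000_000


# Hypothetical workload: a 100K-token prompt with a 2K-token answer.
cost = request_cost(100_000, 2_000)
print(f"${cost:.4f}")  # prints $0.0210
```

Even under these simplifying assumptions, the point stands: at these rates, a large prompt costs a few cents, which is why the pricing (if it holds up) matters as much as the context length.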

Why it matters (or doesn’t): Impressive specs, but keep in mind these are xAI’s own numbers. Independent testing will show if it lives up to the claims.

3️⃣ Alibaba’s Qwen3-Omni: all-in-one multimodal

Qwen3-Omni is a 30B model that unifies text, image, audio, and video — with real-time streaming and broad language/speech support. It’s open-sourced in three flavors: Instruct (general), Thinking (reasoning), and Captioner (low-hallucination audio descriptions).

Key details: Strong benchmark results and built-in tool calling. The Captioner model directly addresses accessibility needs by reducing hallucinations in audio descriptions. This is a cool use case of fine-tuning!

Why it matters (or doesn’t): This is one of the most practical multimodal releases yet, showing how fine-tuned models can solve real gaps (like accessibility) in the community.

4️⃣ Qwen3-TTS-Flash: low-latency speech

Qwen also rolled out a new text-to-speech model with 17 voices across 10 languages, ultra-low latency for real-time use, and state-of-the-art speech stability.

Key details: Multilingual quality is strong, but it isn’t open-source. Still, anyone can try it for free on Hugging Face.

Why it matters (or doesn’t): A step forward for real-time applications, but closed-source limits its adoption in open research or community tooling.

5️⃣ Qwen3-Max: OpenAI’s newest challenger?

Another Qwen release! The Qwen3-Max series is showing competitive results against top models on coding and reasoning benchmarks. The “Instruct” model is already available in preview, while the “Thinking” model, which adds advanced tool use, is still in training.

Key details: Over 1T parameters, trained on 36T tokens using a custom stack built for efficiency and fault tolerance. It offers an OpenAI-compatible API and competitive pricing ($1.20 input / $6 output per 1M tokens for 0–32K context).
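
A minimal sketch of what those Qwen3-Max prices mean per request. It assumes the quoted prices apply only when the prompt (input) length is within the 0–32K tier and reads “32K” as 32,000 tokens; both are assumptions, and since this recap doesn’t list prices for longer contexts, the sketch simply rejects them rather than guessing. The token counts in the usage line are hypothetical.

```python
# Qwen3-Max prices quoted in this recap, in USD per 1M tokens (0–32K context tier).
QWEN_INPUT_PRICE_PER_M = 1.20
QWEN_OUTPUT_PRICE_PER_M = 6.00
# Assumption: the tier is based on prompt length, and "32K" means 32,000 tokens.
QWEN_TIER_LIMIT_TOKENS = 32_000


def qwen3_max_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request within the 0–32K pricing tier."""
    if input_tokens > QWEN_TIER_LIMIT_TOKENS:
        # Higher-tier prices are not covered in this recap, so don't guess them.
        raise ValueError("prompt exceeds the 0-32K tier; price not covered here")
    return (
        input_tokens * QWEN_INPUT_PRICE_PER_M
        + output_tokens * QWEN_OUTPUT_PRICE_PER_M
    ) / 1_000_000


# Hypothetical workload: a 20K-token prompt with a 2K-token answer.
print(f"${qwen3_max_cost(20_000, 2_000):.4f}")  # prints $0.0360
```

Note how output tokens dominate here: at $6 per 1M, 2K output tokens cost half as much as the entire 20K-token prompt, so long reasoning traces matter a lot for the final bill.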

Why it matters (or doesn’t): This looks like the start of a heavyweight rivalry, with Qwen positioning itself as a serious competitor to OpenAI.

And that’s it for this week’s coverage! Did I miss anything worth covering?

Catch next week’s updates as they come out on YouTube, Instagram, and TikTok!

I’m incredibly grateful that the What’s AI newsletter is now read by over 30,000 incredible human beings. Click here to share this iteration with a friend if you learned something new!

Looking for more cool AI stuff? 👇

Looking for AI news, code, learning resources, papers, memes, and more? Follow our weekly newsletter at Towards AI!

Looking to connect with other AI enthusiasts? Join the Discord community: Learn AI Together!

Want to share a product, event or course with my AI community? Reply directly to this email, or visit my Passionfroot profile to see my offers.

Thank you for reading, and I wish you a fantastic week! Be sure to get enough sleep and physical activity next week!

Louis-François Bouchard