Fine-Tuning Is No Longer Out of Reach

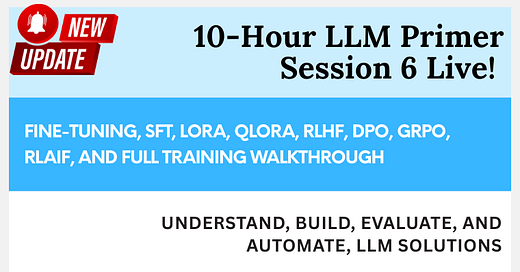

Lesson 6 is LIVE: Fine-Tuning, LoRA, RLHF & Everything You Need to Really Control Your LLMs

Good morning!

I’m super excited to release the final 2-hour session of our “10-hour transition course” from developer to LLM developer!


If you’ve already nailed prompting and retrieval in the first two free sessions, you know that clever context takes you far, but not always far enough.

Tone, domain precision, stubborn hallucinations… sometimes you simply have to re-train the model, especially when you are using a smaller (local) model.

What Lesson 6 delivers:

When to fine-tune an open (or closed) model

Full SFT vs. LoRA vs. QLoRA: when each makes sense and how to avoid wasting compute

PPO, DPO, GRPO, RLHF, RLAIF: a crash course in reinforcement learning and preference optimization for language models

Avoiding failure modes: overfitting vs. underfitting, hallucination spikes, catastrophic forgetting

Hands-on walkthrough with Unsloth (yes, even on free GPUs)

“The course brilliantly cuts through the overwhelm, then hands you tools you can use the same day.” — Matt Chantry

Unlock the full course to watch Lesson 6 now: https://academy.towardsai.net/courses/llm-primer?ref=1f9b29