How do LLMs work? The Transformer Architecture Explained

GPT, Llama, Claude, Bard... How do these AI models work?

Good morning, fellow AI enthusiast! This week's iteration focuses on none other than large language models, like GPT, Llama, Claude, and others. These models all share common building blocks for understanding and generating words. Let's dive into how they do that!

Oh, and if you are using GPT models, you will love the sponsors of this iteration!

[Sponsor] Lacking visibility into your GPT-based applications?

GPT-based applications require a different monitoring approach. Developers must:

Optimize token usage

Ensure API health

Continuously improve prompt quality

Avoid user-facing catastrophes

Run efficient and high-quality GPT-based products. Get started with Mona for free by adding two lines of code!

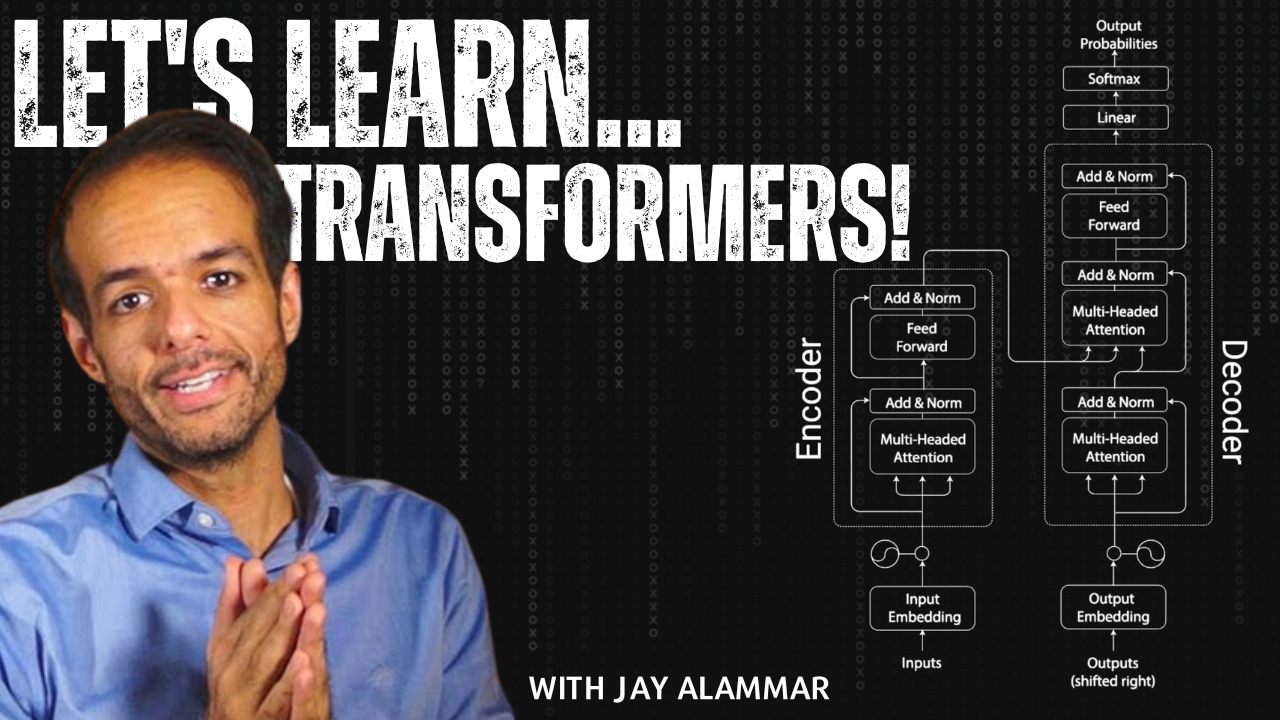

How do LLMs work? The Transformer Architecture Explained with Jay Alammar

A few weeks ago, I was lucky enough to talk on my podcast with probably the best educator in the AI space: Jay Alammar from Cohere. During the interview, I asked if he could explain the architecture behind all recent large language models as simply as possible.

More precisely, we dove into the generative part of the transformer architecture and its different building blocks (e.g., tokenizers, attention, feed-forward networks...). If these don't ring a bell, this video is for you!

After this video, you’ll have a good overview of how LLMs answer questions and, even more importantly, how this type of AI model predicts the next word of a sentence.
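If you'd like a taste before watching, here is a minimal sketch in plain Python of three of those building blocks: a tokenizer, attention, and next-word prediction. It is a toy under loud assumptions, not how any real model is implemented: the five-word `VOCAB`, the whitespace tokenizer, and the function names are all made up for illustration. Real LLMs use subword tokenizers, learned weights, many attention heads, and feed-forward layers (left out here for brevity).

```python
import math

# Hypothetical toy vocabulary; real tokenizers split text into subword pieces.
VOCAB = ["the", "cat", "sat", "on", "mat"]


def tokenize(text):
    """Map each whitespace-separated word to its index in VOCAB."""
    return [VOCAB.index(word) for word in text.lower().split()]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # How strongly the query matches each key, turned into weights.
    weights = softmax(
        [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    )
    # Weighted average of the value vectors.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def next_token(logits):
    """Greedy decoding: pick the vocabulary entry with the highest probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return VOCAB[best], probs[best]
```

For example, `tokenize("The cat sat")` gives `[0, 1, 2]`, and `next_token([0.1, 0.2, 0.3, 2.0, 0.5])` picks `"on"` because it has the highest score. In a real model, those scores come out of the stacked attention and feed-forward layers rather than being typed in by hand.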

We are incredibly grateful that the newsletter is now read by over 12,000 incredible human beings, counting our email list and LinkedIn subscribers. Reach out to contact@louisbouchard.ai with any questions or details on sponsorships, or visit my Passionfroot profile. Follow our newsletter at Towards AI, which shares the most exciting news, learning resources, articles, and memes from our Discord community every week.

If you need more content to get through your week, check out the podcast!

Thank you for reading, and we wish you a fantastic week! Be sure to get enough rest and sleep!

Louis