Good morning, fellow AI enthusiasts! This week's iteration focuses on one of the most important problems when it comes to using AI models: hallucinations. More precisely, how to fix them! Before diving into this essential topic, here's a very cool free event from a sponsor I am extremely glad to share with you...

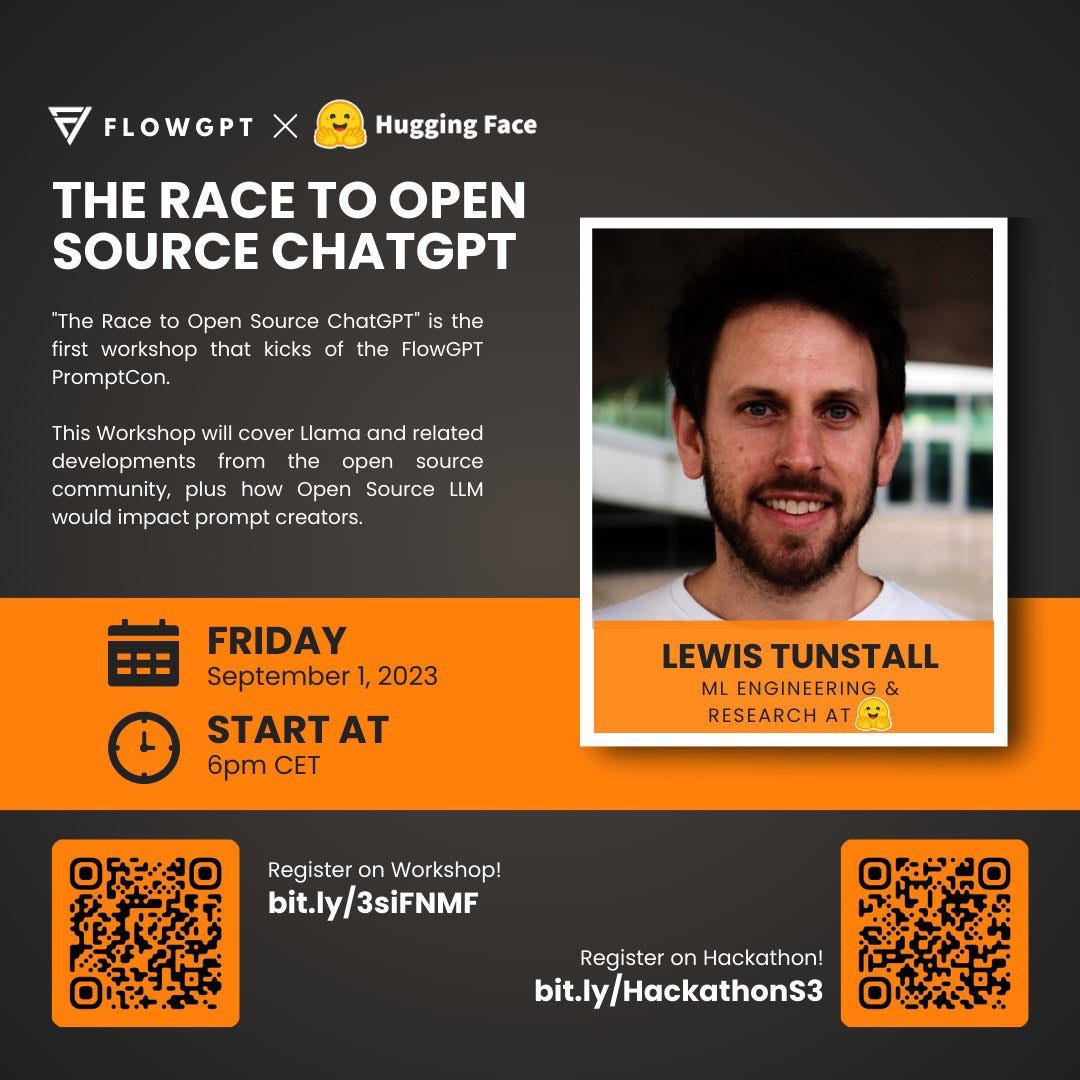

1️⃣ Join the coolest prompt engineering event this year

FlowGPT is hosting promptCon, which includes a series of workshops and the largest prompt hackathon, with $10,000+ in prizes. On 9/1, Lewis Tunstall from Hugging Face will kick off promptCon with a talk on the race to open-source ChatGPT.

2️⃣ Unveiling AI Hallucinations: The Importance of Explainability in AI (XAI)

Hallucinations happen when an AI model gives a completely fabricated answer as if it were a true fact. The model is confident that its answer is right, while the answer is actually nonsensical. We have seen this behavior in ChatGPT, but it can happen with any AI model: the model makes a confident prediction that is simply wrong. The best way to fix this issue is to understand our models and their decision-making processes, which is exactly what a field I am excited to talk about, explainable AI (XAI), aims to do! Read more or watch the video...
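To make "confident but wrong" a bit more concrete, here's a minimal Python sketch with made-up numbers. It assumes a hypothetical three-class classifier whose raw scores (logits) are turned into probabilities with a softmax. The labels, logits, and true label are illustrative assumptions, not outputs from a real model. Note that the softmax probability only reflects the model's own confidence, not whether it is correct.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical example: made-up scores from an imaginary 3-class model
labels = ["cat", "dog", "fox"]
logits = [1.0, 6.0, 0.5]
true_label = "cat"  # what the input really shows (assumed for illustration)

probs = softmax(logits)
pred_index = max(range(len(probs)), key=lambda i: probs[i])
predicted = labels[pred_index]
confidence = probs[pred_index]

print(f"predicted={predicted} confidence={confidence:.3f} correct={predicted == true_label}")
```

Running it prints `predicted=dog confidence=0.989 correct=False`. Nothing in the confidence score itself reveals the mistake, which is why we need tools that help us understand *why* a model made its decision.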

3️⃣ AI Ethics with Auxane

Hey there, fellow AI enthusiasts! Today, we're diving deep into AI ethics and talking about why explainability is such a crucial aspect of it.

At its core, explainability means that we understand how and why an AI system makes its decisions. This is important for several reasons. Firstly, without understanding the reasoning behind a decision, we would have to trust the system's output blindly. This is particularly important in fields like healthcare or finance, where a wrong decision can have significant consequences. Secondly, without explainability, we can't identify and correct biases or erroneous decisions that may exist in the system. This is vital not only for fairness and inclusivity but also for the robustness of a system!

However, there are some major challenges to achieving explainability in AI systems. When we talk about explainability, we can see two angles: technical transparency (usually aimed at engineers or technical experts) and understandability (for all types of users, from a tech company CEO to your baker).

As you can imagine, understandability is a big challenge in itself! You cannot explain things the same way to a highly digitally literate person and to your grandparents. Thus, one of the biggest challenges is variation in digital literacy. Not everyone has the technical background to understand the complex algorithms and processes behind AI. This means that even if an explanation is provided, it may not be easily comprehensible to everyone. This could lead to a lack of trust in the system or even scepticism towards AI as a whole. In turn, this scepticism can hinder the adoption and acceptance of these technologies.

Another challenge is the diversity of cultures in our world. Different cultures may have different expectations around explainability and different ways of understanding, just as counting can differ from one culture to another. For example, some cultures may value transparency and a clear understanding of decision-making processes, through visualisation and a lot of detail, while others may prioritise accurate outcomes over explanations. This means that AI developers must be aware of cultural differences and adapt their systems to their target population.

In conclusion, explainability is a crucial aspect of AI ethics that enables us to trust and understand AI systems. However, achieving explainability is not without its challenges, including variation in digital literacy and cultural differences. As we continue to develop and deploy AI systems, we must strive to achieve an acceptable level of explainability while being aware of these challenges and working to overcome them.

- Auxane Boch (TUM IEAI research associate, freelancer)

We are incredibly grateful that the newsletter is now read by over 13,000 incredible human beings, counting our email list and LinkedIn subscribers. Reach out to contact@louisbouchard.ai with any questions or for details on sponsorships, or visit my Passionfroot profile. Follow our newsletter at Towards AI, which shares the most exciting news, learning resources, articles, and memes from our Discord community every week.

If you need more content to get through your week, check out the podcast!

Thank you for reading, and we wish you a fantastic week! Be sure to get enough rest and sleep!

Louis