Good morning everyone! In this iteration, we talk about reasoning models!

Imagine you open ChatGPT and ask the old strawberry question: “How many R’s are in the word strawberry?”

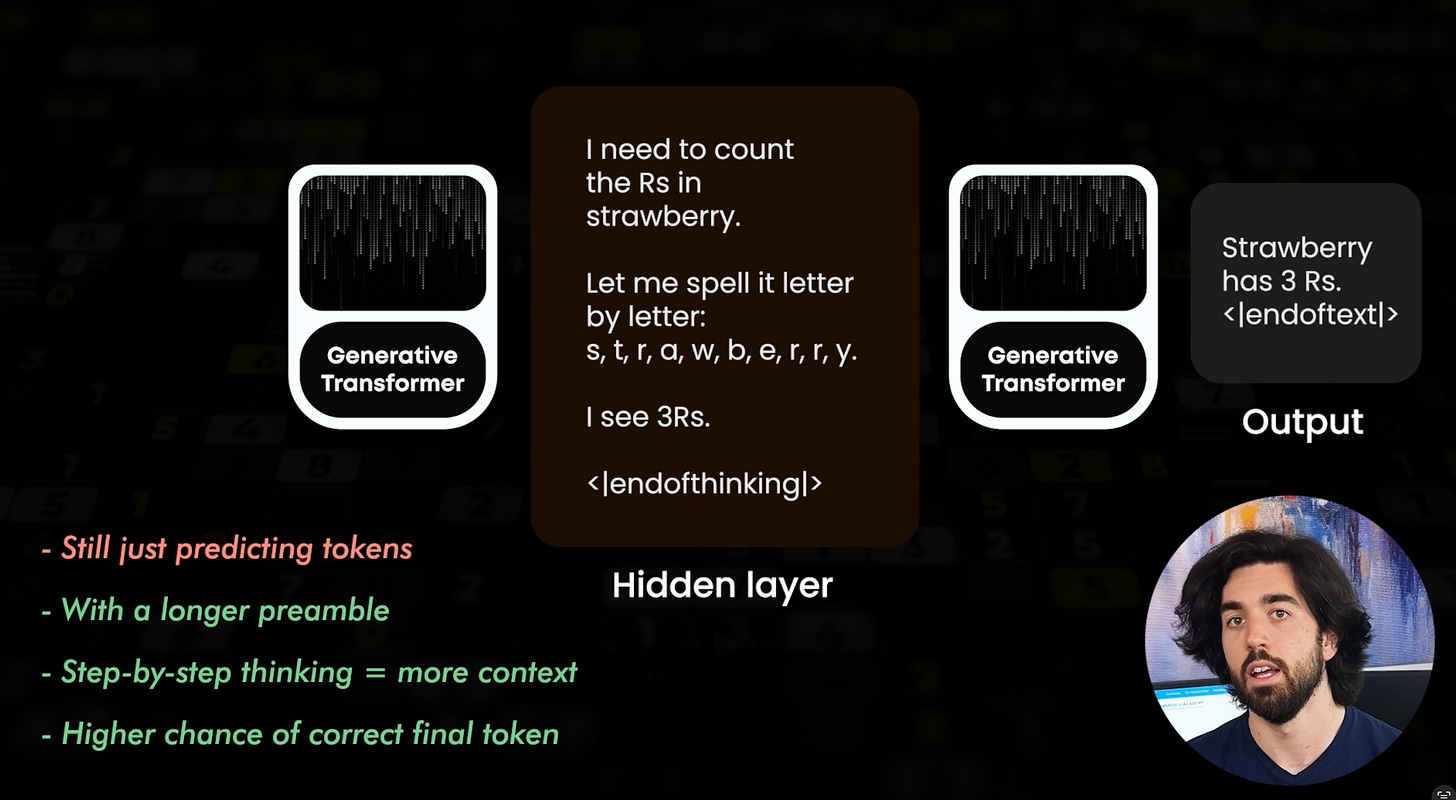

Two years ago, the model would shrug, hallucinate, or, if you were lucky, guess correctly half the time. Today, with the shiny new “reasoning” models, you press Enter and watch the system think. You actually see it spelling s-t-r-a-w-b-e-r-r-y, counting the letters, and then calmly replying “three”. It feels almost magical, as if the model suddenly discovered arithmetic. Spoiler: it didn’t. It has just learned to spend more tokens before it answers you. And it can afford that luxury because the raw capacity underneath has already ballooned from the 117M-parameter GPT-2 (1.5B in its largest flavour), which munched on roughly eight million WebText documents (about 40 GB of text), to trillion-parameter giants trained on roughly 15 trillion tokens, essentially the entire readable web, spread across clusters of tens of thousands of H100 GPUs.
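To make that visible "thinking" concrete, here is a minimal Python sketch of the spell-and-count routine described above. It is only an illustration of the written-out steps, not how a model works internally, and the function name `count_letter_step_by_step` is made up for this example.

```python
def count_letter_step_by_step(word: str, letter: str) -> tuple[int, list[str]]:
    """Spell the word one character at a time and keep a running count.

    Hypothetical helper for illustration: it mimics the written-out
    steps a reasoning model shows, not the model's internals.
    """
    steps = []
    count = 0
    for position, char in enumerate(word, start=1):
        if char.lower() == letter.lower():
            count += 1
        # Record every intermediate step, like a visible chain of thought.
        steps.append(f"{position}: {char} (running count = {count})")
    return count, steps


count, steps = count_letter_step_by_step("strawberry", "r")
print("\n".join(steps))
print(f"Answer: {count}")
```

The point of the sketch is that the answer costs ten small steps instead of one guess: more work spent before replying, which is exactly the trade the reasoning models make.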

That tiny detour is the heart of the newest wave in large-language-model research. For years, we lived by one rule: scaling laws, the empirical observation that results keep improving as you push model size and training data further. More model parameters plus more data equals more skills. Bigger bucket, bigger pile of internet, better performance. But buckets don’t grow forever: we’re scraping the bottom of the web, and GPUs are melting data-centre walls. It seems we are heading into what many researchers call a “pre-training plateau.”

So, instead of tackling the consciousness issue, researchers turned around and asked, “What if we can’t scale the model any further? Could we scale the answer time instead?”

And so reasoning models were born: same neural guts, same weights, but a new inference-time scaling law, meaning more compute after the user clicks send. The hypothesis is that if the results are good enough, we, the users, will be fine with waiting a few seconds, minutes, or even longer.
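If you want to feel why extra compute at answer time can help, here is a toy Python simulation. Fair warning: it uses majority voting over several sampled answers (often called self-consistency), which is a simpler, different technique from the long step-by-step thinking described above. It is swapped in only because it fits in a few lines. The "model" is a hypothetical random stand-in, and the 60% single-shot accuracy is an assumption, not a measured number.

```python
import random
from collections import Counter

CORRECT = 3  # number of R's in "strawberry"


def noisy_answer(rng: random.Random, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for one sampled model answer.

    Assumption: it is right with probability p_correct, otherwise it
    returns one of two wrong answers at random.
    """
    if rng.random() < p_correct:
        return CORRECT
    return rng.choice([2, 4])


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Sample n_samples answers and return the most common one."""
    votes = Counter(noisy_answer(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the majority vote is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == CORRECT for _ in range(trials))
    return hits / trials


# More samples per question means more compute spent after "send".
for n in (1, 5, 25):
    print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

Under these assumptions, accuracy climbs as the number of samples grows, while the simulated "weights" never change. You pay for it in compute, and therefore in waiting time.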

Here’s how it works in practice. Watch the video (or read the written article here):

P.S. The video is sponsored by HeyBoss, a new cool tool I'd invite you to look into ;)

And that's it for this iteration! I'm incredibly grateful that the What's AI newsletter is now read by over 30,000 incredible human beings. Click here to share this iteration with a friend if you learned something new!

Thank you for reading, and I wish you a fantastic week! Be sure to get enough sleep and physical activity next week!

Louis-François Bouchard