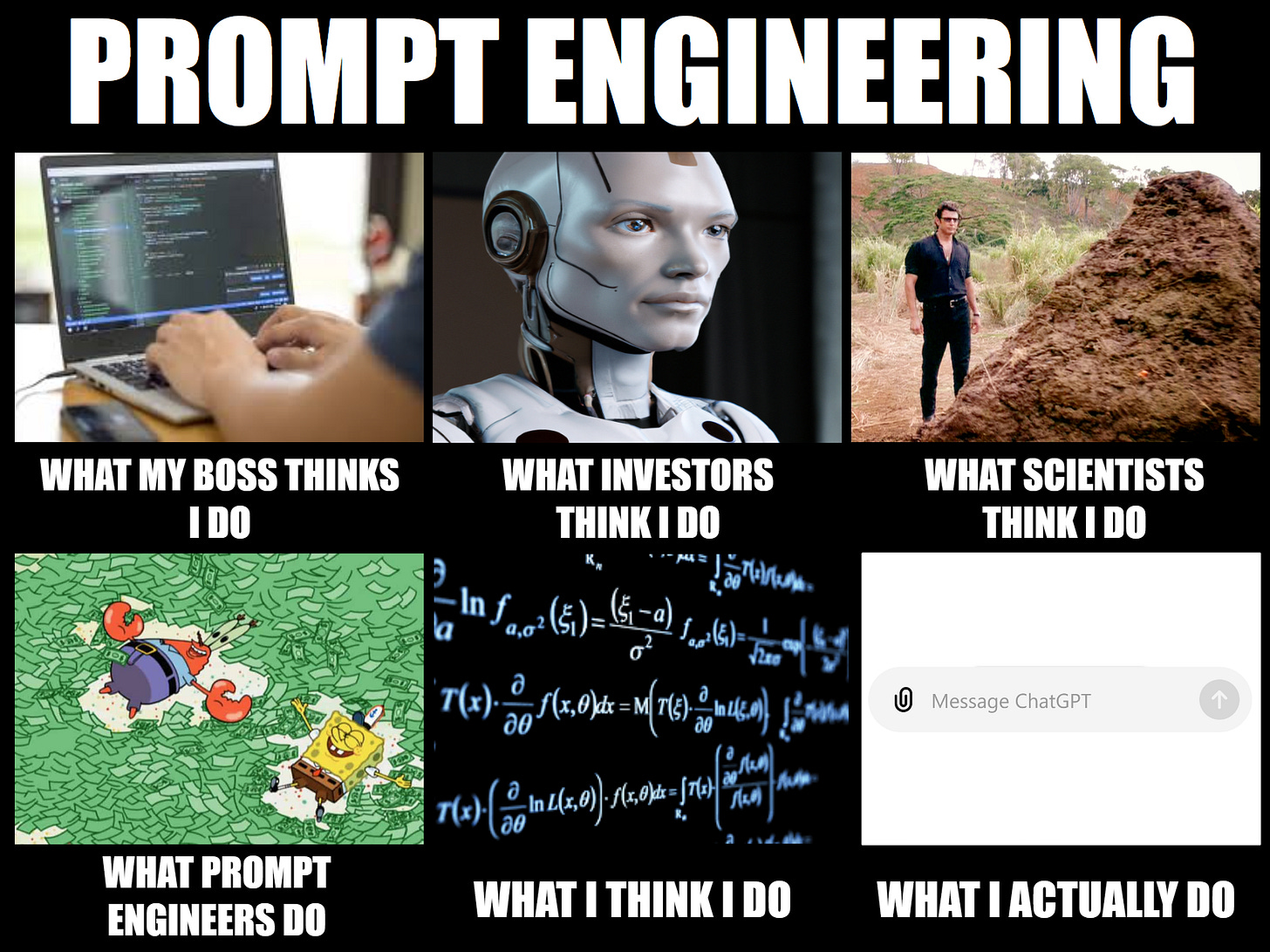

The Myth of Advanced Prompting: Making Simple Things Sound Complicated

The problem with prompting...

Good morning everyone!

Today's newsletter focuses on the current problem with prompting. I recently wrote a piece along with two friends for our weekly High Learning Rate newsletter (which you should follow) and since I had many thoughts and opinions in there, I wanted to share it with you as well.

In this iteration, we'll cover the basics and sprinkle in some so-called "advanced" techniques, which are mostly just common sense wrapped in fancy terminology.

Despite all the hype around "advanced" prompting techniques, it's really just about telling the model what you want in plain language.

It's all about good communication.

Think of it as giving directions: be clear, be concise, and maybe add some examples if you're feeling generous.

Here’s our short opinion piece on prompting…

This issue is brought to you thanks to Aiarty.

1️⃣ Rescue & Enhance Your Images with Aiarty - Get Your 1-Year License Free!

Elevate your visuals with Aiarty Image Enhancer! This user-friendly, fast AI tool enhances images up to 16K/32K resolution for multiple uses and supports batch processing. One-click auto processing: deblur, denoise, upscale, and add lifelike details. For effortless, high-quality image enhancement: test Aiarty, share a simple review, and win a 1-year free license and cash prizes from a $10K pool!

2️⃣ The problem with prompting... and what it really is

1. Understanding Prompts

A prompt is the input or instruction given to a model to generate text. Designing an effective prompt can significantly enhance the quality and relevance of the model's responses. But there’s nothing too complex about it. It’s just good communication: say what you want clearly and concisely, and add examples if you can.

In their simplest form, prompts can be questions or instructions, e.g., asking a model to complete a sentence:

Prompt: "Complete this sentence: The sky is"

Output: "The sky is blue during the day and dark at night."

While this is elementary, more context or more detailed instructions lead to better-controlled outputs. This is the essence of prompt engineering, where you optimize prompts for specific tasks to get the most relevant and accurate results. All the “advanced” techniques are pretty much overkill except the few we will mention in this iteration.

2. Basics of Prompt Formatting

Prompt formatting is extremely important for the model to understand what you want. Use bullet points or clear numbered lists to give your instructions or steps to follow. Just think of drafting the best Slack message for a new task for your colleague, and you should be good.

Most importantly: reread your prompt and run it through a spell checker like Grammarly! Careless typos change words and affect how the models interpret them.

There are tools like Anthropic's Generate a Prompt feature or Cohere’s Prompt Tuner that can help. These tools optimize your initial prompt, making it more detailed and clear to improve performance on whatever task you want. But you don’t want to depend on these tools: learn the essentials of prompting first.

For instance, a simple prompt like "Complete sentences users will give you" might be completely transformed into the following using Anthropic’s Generate a Prompt feature:

"""

You are tasked with completing sentences that users will provide to you. Your goal is to finish the sentence in a way that is grammatically correct, coherent, and contextually appropriate.

Here is the incomplete sentence:

<incomplete_sentence>

{{INCOMPLETE_SENTENCE}}

</incomplete_sentence>

To complete the sentence:

1. Carefully read the incomplete sentence and understand its context and tone.

2. Consider multiple possible endings that would make sense given the beginning of the sentence.

3. Choose the most appropriate and natural-sounding completion.

4. Ensure that your completion maintains the grammatical structure and tense of the original partial sentence.

5. Make sure the completed sentence is coherent and conveys a clear message.

Provide your completed sentence within <completed_sentence> tags. The completed sentence should include both the original incomplete part and your addition, forming a full sentence.

Now, please complete the given sentence:

<completed_sentence>

"""

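To make the {{INCOMPLETE_SENTENCE}} placeholder above concrete, here is a minimal sketch of how such a template could be filled in before it is sent to a model. The helper name `fill_template` and the shortened template are our own assumptions for illustration, not part of Anthropic's tool.

```python
import re

# Shortened version of the generated prompt above (illustrative only).
TEMPLATE = (
    "You are tasked with completing sentences that users will provide to you.\n"
    "Here is the incomplete sentence:\n"
    "<incomplete_sentence>\n{{INCOMPLETE_SENTENCE}}\n</incomplete_sentence>\n"
)


def fill_template(template: str, **values: str) -> str:
    """Replace each {{KEY}} placeholder with its value (hypothetical helper)."""

    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            # Fail loudly instead of sending a half-filled prompt to the model.
            raise KeyError(f"no value provided for placeholder {key}")
        return values[key]

    return re.sub(r"\{\{(\w+)\}\}", substitute, template)


prompt = fill_template(TEMPLATE, INCOMPLETE_SENTENCE="The sky is")
print(prompt)
```

Failing on a missing placeholder is a design choice: a prompt that still contains a raw {{...}} marker is one of those "dumb typos" the model will happily try to interpret.
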
3. “Advanced” Prompting Techniques

As we said, there is no such thing as an advanced prompting technique. Just learn to chat with LLMs and get what you want with trial and error.

The best thing you can do is:

Be clear.

Be concise.

Ask the model to give its reasoning steps.

Iterate (chain) with the model.

Here’s a bit more detail (with the proper names) about the only “techniques” you need to know…

Zero-shot Prompting, aka “Do this”

This is simply telling the model clearly what you want, without providing any examples. It's useful for straightforward tasks where the model has sufficient pre-trained knowledge:

Example: "Classify the following text as positive or negative: 'I had a great day!'"

Output: "Positive".

Note: “Zero-shot” comes from the research literature, where it describes what a model is capable of without any additional information. It’s a way for scientists to describe the raw capabilities of a model. A fancy word for a simple concept.

Few-shot Prompting, aka “Here are some examples”

Few-shot prompting is the best thing you can afford to do without retraining a model. It enhances the model’s ability to perform a task by providing a few examples (e.g. question-answer pairs) alongside the main prompt. This specificity helps the model understand the task better:

Format:

"Q: <Question>? A: <Answer>"

"Q: <Another Question>? A: <Another Answer>"

We usually give 3-5 example questions and/or answers, which show the model how it should behave. This approach is the “best bang for your buck” to execute a new task the model wasn’t trained to perform.
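
As a rough illustration of the Q/A format above, here is a small sketch that assembles a few-shot prompt from a list of example pairs. The function name `build_few_shot_prompt` and the sentiment examples are made up for this illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], question: str
) -> str:
    """Put the instruction first, then the solved examples, then the new question."""
    lines = [instruction, ""]
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question} A: {example_answer}")
    # Leave the last answer empty so the model fills it in.
    lines.append(f"Q: {question} A:")
    return "\n".join(lines)


# Hypothetical examples, in the spirit of the classification task above.
EXAMPLES = [
    ("I had a great day!", "Positive"),
    ("The food was cold and bland.", "Negative"),
    ("Best concert I have ever seen.", "Positive"),
]

few_shot_prompt = build_few_shot_prompt(
    "Classify the following text as positive or negative.",
    EXAMPLES,
    "I want my money back.",
)
print(few_shot_prompt)
```

Ending the prompt on an open "A:" is the whole trick: the examples set the pattern and the model simply continues it.
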

Chain-of-Thought Prompting, aka “Think before acting”

Chain-of-thought (CoT) prompting is probably the best method to make your LLM more “intelligent.” It does wonders. In CoT, we prompt the model to break down its reasoning steps. Put simply, the model is asked to solve problems step by step, which is particularly useful for complex tasks like mathematical reasoning or generating comprehensive text summaries:

Prompt: "Let's think through this step by step to solve the math problem: 'What is 23 + 56?'"

Output: "First, we add 20 and 50 to get 70. Then, adding the remaining 3 and 6 gives 9. So, 70 + 9 equals 79."

It basically acts as a manual mechanism to replicate our thinking process, just like we would think before saying our final answer. The model generates the text bit by bit, and each time it generates a new word (aka token), that token is added to the context along with the original prompt. This dynamically updated context helps the model “think” by decomposing the task step by step. In other words, when you prompt a model this way, you force it to generate additional knowledge first and then use it when answering.

So when you ask the model to “Think before acting,” all the generated intermediate text (usually the initial steps or plan of action) is in its context, helping it “understand” the request better and plan before giving its final answer. Something all humans (should) do!
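
In practice, CoT means two small chores: wrapping the question in a "think step by step" instruction, and fishing the final answer out of the reasoning text. Here is a minimal sketch of both, assuming the final answer is the last number in the output (a simplification that only suits numeric problems). The helper names are ours.

```python
import re
from typing import Optional

COT_PREFIX = "Let's think through this step by step to solve the math problem: "


def build_cot_prompt(problem: str) -> str:
    """Wrap a problem in the step-by-step instruction used in the example above."""
    return f"{COT_PREFIX}'{problem}'"


def extract_final_number(model_output: str) -> Optional[int]:
    """Return the last integer mentioned in the output, or None if there is none."""
    numbers = re.findall(r"-?\d+", model_output)
    return int(numbers[-1]) if numbers else None


# The sample output from the example above, used here instead of a real model call.
sample_output = (
    "First, we add 20 and 50 to get 70. Then, adding the remaining 3 and 6 "
    "gives 9. So, 70 + 9 equals 79."
)

print(build_cot_prompt("What is 23 + 56?"))
print(extract_final_number(sample_output))  # 79
```
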

Chain Prompting, aka “Chatting”

Chain prompting just means iterating with the model. It is basically going back and forth with the AI to improve or correct its answer. You can do this either manually or with automated prompts. The goal is similar to CoT, but the process is more dynamic: the model gets more and better context at each turn, which again lets it reflect on its previous output. The other side of the “discussion” can be you, other LLMs, or APIs. It also allows you to add more dynamic content to the prompts depending on how the “discussion” (or exchange) advances.
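
Here is a small sketch of what the automated version of that back-and-forth could look like. The `llm` argument is any function that takes the message history and returns a reply; `fake_llm` is a stand-in we invented so the example runs without an API key, and the role/content message shape is an assumption borrowed from common chat APIs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def chat_chain(
    llm: Callable[[List[Message]], str], first_prompt: str, follow_ups: List[str]
) -> List[Message]:
    """Send a first prompt, then each follow-up, always passing the full history."""
    messages: List[Message] = [{"role": "user", "content": first_prompt}]
    messages.append({"role": "assistant", "content": llm(messages)})
    for follow_up in follow_ups:
        messages.append({"role": "user", "content": follow_up})
        # The model sees every previous turn, which is what gives it more context.
        messages.append({"role": "assistant", "content": llm(messages)})
    return messages


def fake_llm(messages: List[Message]) -> str:
    """Stand-in for a real model: reports how much context it received."""
    return f"(reply based on {len(messages)} messages of context)"


history = chat_chain(
    fake_llm,
    "Draft a tagline for a bakery.",
    ["Make it shorter.", "Add a pun."],
)
for message in history:
    print(f"{message['role']}: {message['content']}")
```

The follow-ups are hard-coded here, but nothing stops you from generating them from the previous reply, from another LLM, or from an API result.
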

Retrieval Augmented Generation, aka “Search before answering”

You can draw a parallel with Retrieval-Augmented Generation (RAG). RAG is just about retrieving the most relevant information from a database before prompting an LLM. Then, you simply add this retrieved information along with the user question in the prompt. Here, we basically add useful context to the initial prompt before sending it to the model.

Prompt: "Here is some context to answer the user question: <Retrieved information>. Answer this question: <question>"

This helps the model answer the user's question with specific knowledge. You can add as much relevant text as the model can handle.
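
To show the "search before answering" idea end to end, here is a toy sketch. Real systems usually retrieve with embeddings and a vector database; this one just counts shared words, which is our simplification, and the three documents are made up for the example.

```python
import re
from typing import List, Set

# A tiny made-up "database" of passages.
DOCUMENTS = [
    "Few-shot prompting provides a few examples alongside the main prompt.",
    "Chain-of-thought prompting asks the model to break down its reasoning steps.",
    "Temperature controls the randomness of the model output.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents, key=lambda doc: len(question_words & tokenize(doc)), reverse=True
    )
    return ranked[:top_k]


def build_rag_prompt(question: str, documents: List[str], top_k: int = 1) -> str:
    """Put the retrieved passages in front of the user question."""
    context = "\n".join(retrieve(question, documents, top_k))
    return (
        "Here is some context to answer the user question:\n"
        f"{context}\n\n"
        f"Answer this question: {question}"
    )


print(build_rag_prompt("What does temperature control?", DOCUMENTS))
```
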

Some tools also let the model search the Internet, which works just like a RAG database, except that the database is the Internet.

For example, with ChatGPT, you can ask the model to use its web search tool before answering. This is really efficient if the response you seek needs up-to-date information.

Prompt: "Which country has the most Olympic gold medals so far? Use the web search tool before answering."

4. Output Optimization

Besides the prompt, there are other methods to improve output content quality and output structure.

For better content, you can adjust the temperature parameter to control randomness: lower values for more deterministic outputs and higher values for more creative ones. You can also implement “self-consistency” (aka choose the most frequent answer) by prompting the model multiple times with the same input and selecting the response that comes up most often.
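
Both ideas fit in a few lines. The sketch below shows how temperature reshapes a probability distribution (dividing the scores by the temperature before a softmax is the standard formulation) and how self-consistency boils down to a majority vote. The `fake_sampler` and its canned answers are invented so the example runs without a model.

```python
import math
from collections import Counter
from typing import Callable, List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [logit / temperature for logit in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def self_consistent_answer(
    sample_fn: Callable[[str], str], prompt: str, n_samples: int = 5
) -> str:
    """Ask the same question several times and keep the most frequent answer."""
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


# Stand-in for a model sampled at a non-zero temperature (canned answers).
canned_answers = iter(["79", "79", "78", "79", "80"])


def fake_sampler(prompt: str) -> str:
    return next(canned_answers)


sharp = softmax_with_temperature([2.0, 1.0, 0.0], temperature=0.5)
flat = softmax_with_temperature([2.0, 1.0, 0.0], temperature=2.0)
print(max(sharp) > max(flat))  # True: the low temperature is more deterministic
best_answer = self_consistent_answer(fake_sampler, "What is 23 + 56?")
print(best_answer)  # 79
```

Note that self-consistency only makes sense with some randomness: at a temperature near zero, every sample would be nearly identical and the vote would be pointless.
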

Regex checks after the generation can be used to ensure the model output respects a certain format. For example, if you build an application for your customers, you could hide any generated URL for security reasons by spotting the “http(s)://www…” pattern or a specific domain like “towardsai.net”. Another example would be to check that the output is valid JSON.
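
Here is a minimal sketch of both post-generation checks. The URL pattern is deliberately simple and is our own assumption (it will, for instance, swallow punctuation glued to the end of a link), and for the JSON check we lean on Python's JSON parser rather than a regex, since parsing is the more reliable test.

```python
import json
import re

# Matches http(s) links, plus bare mentions of one specific domain.
URL_PATTERN = re.compile(
    r"https?://\S+|\b(?:www\.)?towardsai\.net\b\S*", re.IGNORECASE
)


def redact_urls(text: str, placeholder: str = "[link removed]") -> str:
    """Replace anything that looks like a URL with a placeholder."""
    return URL_PATTERN.sub(placeholder, text)


def is_valid_json(text: str) -> bool:
    """Return True if the model output parses as JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


model_output = "Read more at https://www.towardsai.net/p/x or towardsai.net today."
print(redact_urls(model_output))
print(is_valid_json('{"sentiment": "positive"}'))  # True
print(is_valid_json("Sure! Here is your JSON: {...}"))  # False
```
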

Constrained sampling (aka blacklisting words) is a similar concept: you tell the LLM which words or parts of words (tokens) to remove from its vocabulary at generation time. The model can't produce the blacklisted words and can therefore only generate the allowed ones. This approach gives precise control over the output format with minimal performance impact because it simply filters words during generation (rather than after generation, as the regex check does).

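Note: This method requires direct access to the model's token probabilities. You can use llama.cpp to apply this technique with an open-weight model like Llama 3, but an API-accessed model like GPT-4o gives you limited control at best (for example, OpenAI's logit_bias parameter, which lowers or raises the odds of specific tokens).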
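
To see why this needs access to the model internals, here is a toy sketch of the idea: before picking the next token, the scores of the blacklisted tokens are set to minus infinity so they can never win. The four-word vocabulary and the scores are made up, and real implementations (such as llama.cpp grammars) are far more involved.

```python
import math
from typing import List, Set

# Toy vocabulary and made-up next-token scores (logits) from a model.
VOCAB = ["yes", "no", "maybe", "http"]
LOGITS = [1.2, 0.4, 0.9, 3.5]


def mask_banned(logits: List[float], vocab: List[str], banned: Set[str]) -> List[float]:
    """Set the score of every blacklisted token to -inf so it cannot be chosen."""
    return [
        -math.inf if token in banned else logit
        for token, logit in zip(vocab, logits)
    ]


def greedy_pick(logits: List[float], vocab: List[str]) -> str:
    """Pick the highest-scoring token (greedy decoding)."""
    best_index = max(range(len(logits)), key=lambda index: logits[index])
    return vocab[best_index]


unconstrained = greedy_pick(LOGITS, VOCAB)
constrained = greedy_pick(mask_banned(LOGITS, VOCAB, {"http"}), VOCAB)
print(unconstrained)  # http
print(constrained)  # yes
```
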

With OpenAI and most other big LLM providers, you can use JSON mode or tool (function) calling. Not all models support these, since the model has to be trained for them specifically. In JSON mode, the LLM is trained to generate outputs formatted as valid JSON, while function calling lets you provide a function signature, and the model then returns the arguments to call that function in valid JSON format.
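
Here is a rough sketch of the function-calling round trip on your side of the API, with the model's reply hard-coded so the example runs offline. The `get_weather` function, the tool description, and the exact field names are assumptions for illustration: providers describe parameters with a JSON Schema along these lines, but check your provider's documentation for the exact shape.

```python
import json
from typing import Callable, Dict


def get_weather(city: str, unit: str = "celsius") -> str:
    """Hypothetical function we want the model to be able to call."""
    return f"Weather for {city} in {unit}"


# The "function signature" sent to the model, written as a JSON Schema.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

REGISTRY: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Parse the JSON arguments returned by the model and call the real function."""
    arguments = json.loads(arguments_json)
    return REGISTRY[tool_name](**arguments)


# Stand-in for what a model would return after seeing WEATHER_TOOL.
model_arguments = '{"city": "Montreal", "unit": "celsius"}'
result = dispatch("get_weather", model_arguments)
print(result)  # Weather for Montreal in celsius
```

The model never runs anything itself: it only returns the function name and the arguments, and your code decides whether and how to execute the call.
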

When experimenting with these approaches, consider not only the trade-offs between creativity, accuracy, and structure but also the capabilities of your chosen LLM. For instance, you might combine temperature adjustment and self-consistency to improve content, then apply an appropriate structuring method based on your LLM's capabilities and your specific needs, which will change if you switch from Llama to Claude.

And that's it for this iteration! I'm incredibly grateful that the What's AI newsletter is now read by over 16,000 incredible human beings. Click here to share this iteration with a friend if you learned something new!


Thank you for reading, and I wish you a fantastic week! Be sure to get enough sleep and physical activity next week!

Louis-François Bouchard

Really cool article. Definitely takes out a lot of FOMO that I used to have about prompt engineering.

One content question: is there any particular reason for which you use the word blacklist instead of blocklist?

Anyhow, keep up the good work.